Lesson 4: Biowulf modules, swarm, and batch jobs

Quick review

The previous lessons taught participants how to connect to Biowulf and navigate through the environment.

Learning objectives

After this lesson, participants should be able to

- Find bioinformatics applications that are installed on Biowulf

- Load applications that are installed on Biowulf

- Describe the Biowulf batch system

- Use nano to edit files

- Use swarm to submit a group of commands to the Biowulf batch system

- Submit a script to the Biowulf batch system

Commands that will be discussed

- module avail: list available applications on Biowulf

- module spider: list available applications on Biowulf

- module whatis: get application description

- module load: load an application

- nano: open the Unix text editor to edit files

- touch: create a blank text file

Before getting started

Sign onto Biowulf using the assigned student account. Remember, Windows users will need to open the Command Prompt and Mac users will need to open the Terminal. Also remember to connect to the NIH network either by being on campus or through VPN before attempting to sign in. The command to sign in to Biowulf is below, where username should be replaced by the student ID.

ssh username@biowulf.nih.gov

See here for student account assignment. Enter NIH credentials to see the student account assignment sheet after clicking the link.

After connecting to Biowulf, change into the data directory. Again, replace username with the student account ID.

cd /data/username

Biowulf does not keep data in the student accounts after class, so copy the folder unix_on_biowulf_2023_documents in /data/classes/BTEP to the present working directory, which should be /data/username.

cp -r /data/classes/BTEP/unix_on_biowulf_2023_documents .

Change into unix_on_biowulf_2023_documents.

cd unix_on_biowulf_2023_documents

Bioinformatics applications on Biowulf

Biowulf houses thousands of applications. To get a list of applications that are available on Biowulf, use the module command with its avail subcommand.

module avail

Use the up and down arrow keys to scroll through the list or use the space bar to scroll one page at a time. Hit q to exit the modules list and return to the prompt.

module spider also lists applications but displays results in a different format. Hit q to exit module spider.

Use module spider followed by the application name to search for a specific application. For instance, search for fastqc, which is used to assess the quality of Next Generation Sequencing data.

module spider fastqc

Biowulf keeps the current version and previous versions of an application. By default, Biowulf loads the current version.

-------------------------------------------------------------------------------------------

fastqc:

-------------------------------------------------------------------------------------------

Versions:

fastqc/0.11.8

fastqc/0.11.9

-------------------------------------------------------------------------------------------

For detailed information about a specific "fastqc" package (including how to load the modules) use the module's full name.

Note that names that have a trailing (E) are extensions provided by other modules.

For example:

$ module spider fastqc/0.11.9

-------------------------------------------------------------------------------------------

To find out how to load FASTQC

module spider fastqc/0.11.9

-----------------------------------------------------------------------------------------------------------------------

fastqc: fastqc/0.11.9

-----------------------------------------------------------------------------------------------------------------------

This module can be loaded directly: module load fastqc/0.11.9

Help:

This module sets up the environment for using fastqc.

To find out what FASTQC does

module whatis fastqc

fastqc/0.11.9 : fastqc: It provide quality control functions to next gen sequencing data.

fastqc/0.11.9 : Version: 0.11.9

Working with Biowulf bioinformatics applications

This exercise will demonstrate how to use a Biowulf bioinformatics application called seqkit. The skills can be used for running other applications that are available on Biowulf.

What is seqkit?

module whatis seqkit

seqkit/2.1.0 : A cross-platform and ultrafast toolkit for FASTA/Q file manipulation in Golang

Before doing anything computationally intensive, request an interactive session.

sinteractive

Load seqkit or any other tool (tools will not load in the login node)

module load seqkit

Change into the SRR1553606 directory

cd SRR1553606

ls

There are two Next Generation Sequencing fastq files in this folder

SRR1553606_1.fastq SRR1553606_2.fastq

Use the stat subcommand of seqkit to get some statistics about SRR1553606_1.fastq.

seqkit stat SRR1553606_1.fastq

file format type num_seqs sum_len min_len avg_len max_len

SRR1553606_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101
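
As a quick sanity check on the num_seqs column, the number of reads in a FASTQ file can be estimated from its line count. The sketch below assumes every record occupies exactly four lines (header, sequence, "+", qualities), which is not guaranteed for every FASTQ file. The helper name count_reads and the tiny demo file are made up for illustration and are not part of seqkit or Biowulf.

```shell
# Hypothetical helper: count FASTQ records by dividing the line count by 4.
# Assumes each record is exactly four lines (no wrapped sequence lines).
count_reads() {
    echo $(( $(wc -l < "$1") / 4 ))
}

# Build a tiny two-record FASTQ file so the sketch runs without real data.
demo_fastq=$(mktemp)
printf '@read1\nACGT\n+\nIIII\n@read2\nTTGA\n+\nIIII\n' > "$demo_fastq"

# Print the number of records in the demo file.
count_reads "$demo_fastq"
```

Run against SRR1553606_1.fastq, a helper like this would be expected to agree with the num_seqs value reported by seqkit stat, provided the four-lines-per-record assumption holds.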

Convert SRR1553606_1.fastq to fasta using the fq2fa subcommand of seqkit.

seqkit fq2fa SRR1553606_1.fastq

>SRR1553606.1 1 length=101

ATACACATCTCCGAGCCCACGAGACCTCTCTACATCTCGTATGCCGTCTTCTGCTTGAAAAAAAAAACAGGAGTCGCCCAGCCCTGCTCAACGAGCTGCAG

>SRR1553606.2 2 length=101

CAACAACAACACTCATCACCAAGATACCGGAGAAGAGAGTGCCAGCAGCGGGAAGCTAGGCTTAATTACCAATACTATTGCTGGAGTAGCAGGACTGATCA
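
To make the conversion less of a black box, here is a minimal sketch of the same idea using awk: keep the header and sequence lines of each record and swap the leading "@" for ">". It assumes four-line FASTQ records and is only a stand-in for illustration, not how seqkit is implemented. The function name fastq_to_fasta and the demo reads are hypothetical.

```shell
# Hypothetical stand-in for the conversion: keep line 1 (header) and line 2
# (sequence) of every four-line record, replacing the leading "@" with ">".
fastq_to_fasta() {
    awk 'NR % 4 == 1 { print ">" substr($0, 2) }
         NR % 4 == 2 { print }' "$1"
}

# Tiny two-record FASTQ file so the sketch runs without real data.
demo_fastq=$(mktemp)
printf '@read1 length=4\nACGT\n+\nIIII\n@read2 length=4\nTTGA\n+\nIIII\n' > "$demo_fastq"

# Print the FASTA version of the demo file to the screen.
fastq_to_fasta "$demo_fastq"
```

Like the seqkit command above, this prints to the screen. Adding a shell redirect such as > SRR1553606_1.fasta (a file name chosen here only as an example) would save the result to a file instead.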

Submitting jobs to the Biowulf batch system

For this portion of the class, change back to the /data/username folder

cd /data/username

Then make a new directory called SRP045416 and change into it.

mkdir SRP045416

cd SRP045416

On Biowulf, a swarm script can help parallelize tasks. For example, multiple sequencing data files from the NCBI SRA study "Zaire ebolavirus sample sequencing from the 2014 outbreak in Sierra Leone, West Africa" can be downloaded in parallel rather than one file after another. The example here will download the first 10,000 reads of the following sequencing data files in this study.

- SRR1553606

- SRR1553416

- SRR1553417

- SRR1553418

- SRR1553419

Create a file called SRP045416.swarm in the nano editor

nano SRP045416.swarm

Copy and paste the following script into the editor.

#SWARM --job-name SRP045416

#SWARM --sbatch "--mail-type=ALL --mail-user=username@nih.gov"

#SWARM --partition=student

#SWARM --gres=lscratch:15

#SWARM --module sratoolkit

fastq-dump --split-files -X 10000 SRR1553606

fastq-dump --split-files -X 10000 SRR1553416

fastq-dump --split-files -X 10000 SRR1553417

fastq-dump --split-files -X 10000 SRR1553418

fastq-dump --split-files -X 10000 SRR1553419

In the swarm script above, the first five lines start with #SWARM. They are not run as commands; they are directives for requesting resources on Biowulf. The swarm directives are interpreted as follows:

- --job-name

- assigns the job name (i.e., SRP045416)

- --sbatch "--mail-type=ALL --mail-user=username@nih.gov"

- asks Biowulf to email all job notifications (replace username with the NIH username)

- --partition

- selects the partition to run on (student accounts need to use the student partition)

- --gres

- asks for a generic resource (i.e., 15 GB of local temporary storage, specified as lscratch:15)

- --module

- loads modules (i.e., sratoolkit, which houses fastq-dump for downloading sequencing data from the Sequence Read Archive)

After editing a file using nano, hit control-x to exit. When prompted to save, hit "y", then press Enter to confirm the file name.
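
As an alternative to typing each line in nano, a swarm file with one command per accession could be generated with a short loop. The sketch below reuses the directives and the fastq-dump command from the script above. The output name SRP045416_generated.swarm and the temporary directory are assumptions made so that the hand-written file is not overwritten.

```shell
# Hypothetical output location: a temporary directory, so the hand-written
# SRP045416.swarm in the class folder is left untouched.
swarm_file="$(mktemp -d)/SRP045416_generated.swarm"

# Write the same #SWARM directives used in the lesson.
cat > "$swarm_file" <<'EOF'
#SWARM --job-name SRP045416
#SWARM --sbatch "--mail-type=ALL --mail-user=username@nih.gov"
#SWARM --partition=student
#SWARM --gres=lscratch:15
#SWARM --module sratoolkit
EOF

# Append one fastq-dump command per accession from the list above.
for acc in SRR1553606 SRR1553416 SRR1553417 SRR1553418 SRR1553419; do
    echo "fastq-dump --split-files -X 10000 $acc" >> "$swarm_file"
done

# Show the generated file for review before submitting it.
cat "$swarm_file"
```

The benefit is modest for five accessions, but the same loop scales to a longer list without retyping the command.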

To submit SRP045416.swarm

swarm -f SRP045416.swarm

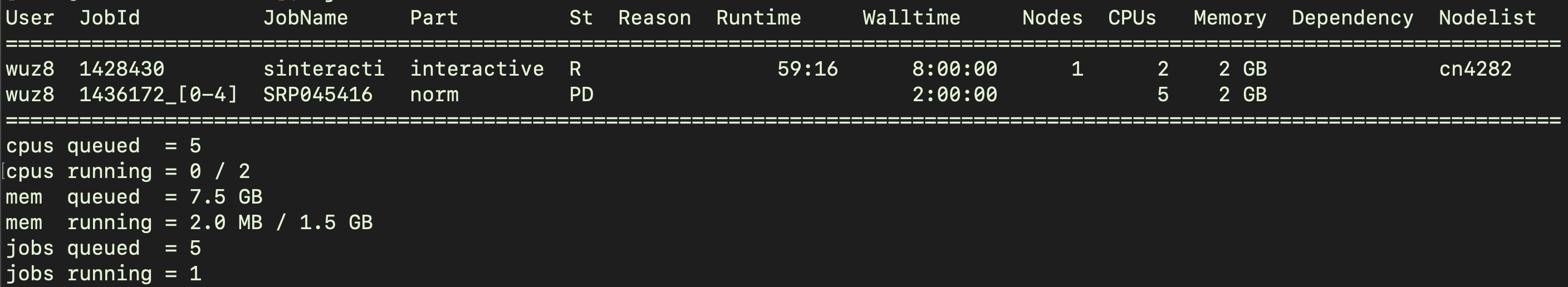

Use sjobs to check job status and resource allocation. Figure 1 shows the information provided by sjobs when SRP045416.swarm was submitted.

sjobs

Some important columns in Figure 1 include the following.

- JobID

- St, which provides the job status

- R for running

- PD for pending

- Walltime, which indicates how much time was allocated for the job

- Number of CPUs and memory assigned

Note that the swarm script was assigned job ID 1436172 and there are five sub-jobs, as indicated by [0-4], which matches the five commands in the script. Biowulf assigned 5 CPUs (see cpus queued) and 7.5 GB of memory, or 1.5 GB per sub-job (see mem queued), for the swarm script.

Figure 1: Use sjobs to check status and resource allocation after submitting a job to Biowulf.

After the swarm script finishes, use ls to list the contents of the directory. Use the -1 option to show one item per line.

ls -1

There are swarm log files with .e and .o extensions. Importantly, the fastq files were downloaded.

SRP045416_65452913_0.e

SRP045416_65452913_0.o

SRP045416_65452913_1.e

SRP045416_65452913_1.o

SRP045416_65452913_2.e

SRP045416_65452913_2.o

SRP045416_65452913_3.e

SRP045416_65452913_3.o

SRP045416_65452913_4.e

SRP045416_65452913_4.o

SRP045416.swarm

SRR1553416_1.fastq

SRR1553416_2.fastq

SRR1553417_1.fastq

SRR1553417_2.fastq

SRR1553418_1.fastq

SRR1553418_2.fastq

SRR1553419_1.fastq

SRR1553419_2.fastq

SRR1553606_1.fastq

SRR1553606_2.fastq
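
Because --split-files writes two files per accession (_1 and _2), it can be worth confirming that both mates arrived for every accession. The sketch below is one way to do that. The helper name missing_mates is hypothetical, and the demo uses empty placeholder files in a temporary directory rather than real downloads.

```shell
# Hypothetical helper: report any accession that lacks a mate file.
# Usage: missing_mates DIRECTORY ACCESSION [ACCESSION ...]
missing_mates() {
    dir=$1
    shift
    for acc in "$@"; do
        for mate in 1 2; do
            [ -f "$dir/${acc}_${mate}.fastq" ] || echo "missing: ${acc}_${mate}.fastq"
        done
    done
}

# Demo with empty placeholder files; the second mate of SRR1553606
# is deliberately left out so the check has something to report.
demo_dir=$(mktemp -d)
touch "$demo_dir/SRR1553416_1.fastq" "$demo_dir/SRR1553416_2.fastq" "$demo_dir/SRR1553606_1.fastq"

missing_mates "$demo_dir" SRR1553416 SRR1553606
```

In the SRP045416 directory, the same function could be called with . as the directory followed by the five accession names. No output would mean all ten files are present.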

An advantage of using command line and scripting to analyze data is the ability to automate, which is desired when working with multiple input files such as fastq files derived from sequencing experiments. A bash script can help obtain stats using seqkit for the fastq files that were just downloaded. Create a script called SRP045416_stats.sh.

nano SRP045416_stats.sh

Copy and paste the following into the editor.

#!/bin/bash

#SBATCH --job-name=SRP045416_stats

#SBATCH --mail-type=ALL

#SBATCH --mail-user=username@nih.gov

#SBATCH --mem=1gb

#SBATCH --partition=student

#SBATCH --time=00:02:00

#SBATCH --output=SRP045416_stats_log

#LOAD REQUIRED MODULES

module load seqkit

#CREATE TEXT FILE TO STORE THE seqkit stat OUTPUT

touch SRP045416_stats.txt

#CREATE A FOR LOOP TO LOOP THROUGH THE FASTQ FILES AND GENERATE STATISTICS

#Use ">>" to redirect and append output to a file

for file in *.fastq;

do seqkit stat "$file" >> SRP045416_stats.txt;

done

To submit this script, use sbatch. After the job finishes, use cat to view the results in SRP045416_stats.txt.

sbatch SRP045416_stats.sh

cat SRP045416_stats.txt

file format type num_seqs sum_len min_len avg_len max_len

SRR1553416_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553416_2.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553417_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553417_2.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553418_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553418_2.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553419_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553419_2.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553606_1.fastq FASTQ DNA 10,000 1,010,000 101 101 101

file format type num_seqs sum_len min_len avg_len max_len

SRR1553606_2.fastq FASTQ DNA 10,000 1,010,000 101 101 101
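
The header line repeats in the output above because the loop runs the command once per file and ">>" appends each result to the end of the text file. The sketch below reproduces that behavior with a made-up stand-in command, fake_stat, so it runs without seqkit. It also swaps touch for ": >" to empty the report first, which is an assumption about what is wanted when a job is rerun, since touch leaves existing content in place.

```shell
# Hypothetical stand-in for "seqkit stat": print a header and one data line.
fake_stat() {
    echo "file lines"
    echo "$(basename "$1") $(( $(wc -l < "$1") ))"
}

# Two small placeholder files in a temporary directory.
demo_dir=$(mktemp -d)
printf 'a\nb\n' > "$demo_dir/x.fastq"
printf 'c\n' > "$demo_dir/y.fastq"
report="$demo_dir/stats.txt"

# ">" empties the report once, so a rerun does not pile up old results.
: > "$report"

# ">>" appends, so each pass through the loop adds a header and a data line.
for file in "$demo_dir"/*.fastq; do
    fake_stat "$file" >> "$report"
done

cat "$report"
```

If a single header is preferred, one option worth trying is to give seqkit stat all of the files in one call instead of looping. That behavior is an assumption here and should be checked against the seqkit documentation.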

Explanation of the SRP045416_stats.sh script.

- Lines that start with "#" are comments and are not run as a part of the script

- A shell script starts with #!/bin/bash, where "#!" is known as the shebang (or sha-bang). Following "#!" is the path to the command interpreter (i.e., /bin/bash)

- Lines that start with #SBATCH are directives. Because these lines start with "#", they will not be run as a part of the script. However, these lines are important because they tell Biowulf when and where to send job notifications as well as what resources need to be allocated.

- job-name: (name of the job)

- mail-type: (type of notification emails to receive, ALL will send all notifications including begin, end, cancel)

- mail-user: (where to send notification emails, replace with NIH email)

- mem: (RAM or memory required for the job)

- partition: (which partition to use; student accounts will need to use the student partition)

- time: (how much time should be allotted for the job, in hours:minutes:seconds; the script requests 2 minutes)

- output: (name of the log file)
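
The --time value in the script is written as hours:minutes:seconds, so 00:02:00 requests two minutes. The sketch below converts a number of minutes into that form. The helper name minutes_to_walltime is hypothetical, and the sketch covers only this one format.

```shell
# Hypothetical helper: format a whole number of minutes as HH:MM:SS.
minutes_to_walltime() {
    printf '%02d:%02d:00\n' $(( $1 / 60 )) $(( $1 % 60 ))
}

minutes_to_walltime 2     # prints 00:02:00
minutes_to_walltime 10    # prints 00:10:00
minutes_to_walltime 90    # prints 01:30:00
```

A job that runs past its requested time is typically stopped by the scheduler, so it is safer to round the estimate up than down.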