Generative artificial intelligence (genAI) is a subset of AI that creates new content (e.g., text, images, code) in response to a user’s query (Lo, The Journal of Academic Librarianship, 2023). Common genAI tools include large language models (LLMs) such as OpenAI’s GPT models, Anthropic’s Claude, Google’s Gemini, and Meta’s Llama, as well as image-generation tools such as DALL-E. These models are trained using machine learning techniques such as deep neural networks, which learn statistical relationships between words, pixels, or other data elements. When a user submits a query, the model predicts the most likely output and produces novel content based on patterns learned from its training data. See “Generative AI exists because of the transformer: This is How it Works” for a visual explanation of how these models function.

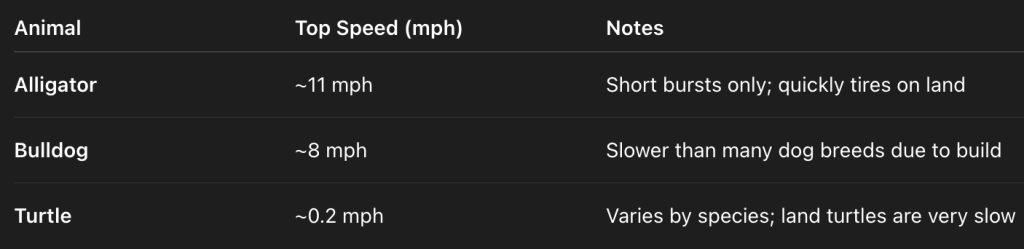
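
To make the idea of "predicting the most likely output" more concrete, here is a minimal Python sketch of a toy next-word predictor. It is only an illustration: the corpus is made up, the function names `predict_next` and `generate` are hypothetical, and real LLMs use deep neural networks trained on vastly more data rather than simple word-pair counts.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration only).
corpus = (
    "the cell divides . the cell grows . "
    "the cell divides . the protein folds ."
).split()

# Count how often each word follows each other word in the corpus.
following = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    following[current_word][next_word] += 1


def predict_next(word):
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = following.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(start, max_words=5):
    """Build a short sequence by repeatedly appending the most likely next word."""
    words = [start]
    for _ in range(max_words - 1):
        next_word = predict_next(words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


print(predict_next("the"))   # the most frequent follower of "the" in the corpus
print(generate("the", 4))    # a four-word sequence built one prediction at a time
```

The same loop of "look at what came before, pick a likely continuation, repeat" is the intuition behind text generation, although production models weigh far more context than the single previous word used here.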

NIH scientists have several AI tools at their disposal free of charge. These include CHIRP and ChatGPT, as well as Claude at the enterprise level. Central to each tool is the chat box, where users pose questions or requests in natural language and the tool responds. For instance, when ChatGPT is asked to “Make a table that summarizes the top running speed (in miles per hour) for alligators, bulldogs, and turtles”, it draws on patterns learned from its training data to generate a summary table for the user. Without such a tool, researchers would have to run keyword searches and then collate and summarize the information on their own.

This article highlights some basic features of each tool and explains how NIH researchers can start using them.

CHIRP

As discussed in a previous topic spotlight, CHIRP is a chatbot designed for the NIH community. It is a secure, NIH-integrated tool that leverages LLMs to provide natural language responses to your questions and tasks. See the dedicated CHIRP SharePoint site to learn more. To start using CHIRP, go to https://chirp.od.nih.gov/. CHIRP was created to assist scientists with NIH-specific research. It enables researchers to work with protected sensitive data such as unpublished work and de-identified clinical data. Personally Identifiable Information, however, cannot be used in CHIRP.

ChatGPT

ChatGPT Enterprise is available to NIH scientists. To start using this tool, go to https://go.hhs.gov/chatgpt. See https://intranet.hhs.gov/ai/chatgpt to learn more. ChatGPT should not be used with the following types of data:

• Sensitive Personally Identifiable Information (SPII)

• Protected Health Information (PHI)

• Classified information

• Export Controlled Data

• Confidential Commercial Information or Trade Secret Data

Claude

To start using Claude, visit https://claude.hhs.gov/. Claude is FedRAMP approved, so it can be used with many data types, but not with classified information. See https://intranet.hhs.gov/ai to learn about Claude enterprise.

Note that scientists need to be on the NIH network, either on campus or via VPN, to use these generative AI tools. Authentication using NIH credentials is required for access. The Google Chrome browser is recommended for these tools.

How Can Scientists Use Generative AI?

Whether using CHIRP, ChatGPT, or Claude, NIH scientists can use generative AI to:

• Spark ethical debates in biomedical research.

• Upload and analyze documents.

• Draft documents and teaching material.

• Generate summary tables.

• Analyze tabular data.

• Generate or troubleshoot code as well as generate Quarto Markdown or Jupyter Notebooks.

• Generate workflow diagrams or other images.

Limitations

Generative AI can make mistakes, so always fact-check the results. GenAI output depends heavily on user input (prompts), so take care when writing prompts (see training opportunities below). Points of caution when using generative AI are listed below.

• Chats will become slower as they get longer.

• Generative AI tools may show short-term memory loss, forgetting replies from a couple of messages back.

• The quality of answers and the time it takes to generate them may depend on the model used.

• Fact-check generative AI output, as these tools can hallucinate, that is, present false or made-up information.
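
One plausible reason for the short-term memory loss noted above is that a model can only read a limited amount of text at once, so older parts of a long chat may be dropped. The Python sketch below illustrates that idea under loud assumptions: the function `trim_history` is hypothetical, the budget is counted in words rather than the tokens real models use, and this is not a description of how CHIRP, ChatGPT, or Claude actually manage chat history.

```python
def trim_history(messages, max_words):
    """Keep only the most recent messages whose combined word count fits max_words.

    Simplification: real systems budget in tokens, not words, and may
    summarize older messages instead of dropping them outright.
    """
    kept = []
    used = 0
    # Walk backward from the newest message and stop once the budget is full.
    for message in reversed(messages):
        size = len(message.split())
        if used + size > max_words:
            break
        kept.append(message)
        used += size
    # Restore chronological order for the messages that fit.
    return list(reversed(kept))


# Made-up chat history for illustration.
chat = [
    "Use miles per hour in every table.",
    "Summarize alligator top speed.",
    "Now add bulldogs and turtles.",
]

# With a small budget, the earliest instruction no longer fits.
visible = trim_history(chat, max_words=10)
print(visible)
```

In this toy example the earliest instruction (use miles per hour) falls outside the budget, which mirrors how a tool might seem to forget an earlier request. Restating key instructions or starting a fresh chat are common workarounds.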

Education Opportunities

Classes:

At a fundamental level, generative AI changes how information is searched for and how scientists consume it. The NIH Library offers classes on the use of generative AI. Below are classes occurring in the first several months of 2026.

• AI Literacy: Navigating the World of Artificial Intelligence

• Best Practices for Prompt Generation in AI Chatbots

• Crafting Your Generative AI Usage Strategy: Lunch and Learn

• AI Update: What’s New in Artificial Intelligence

To learn about innovative ways in which AI is applied to biomedical research, check out previous seminars from the AI in Biomedical Research @ NIH Seminar Series on the BTEP Video Archive and check the BTEP calendar for future events.

Interest Groups:

NIH scientists can check out the following interest groups to learn more about generative AI practices and developments.

• Join the NIH GenAI Community of Practice Teams Channel and attend one of their monthly office hours to learn about new developments in AI.

• Artificial Intelligence Interest Group

• Join the CHIRP Teams channel to catch up on new developments.

Useful References:

- NIH Library’s guide to generative AI use

- The CLEAR path: A framework for enhancing information literacy through prompt engineering

- Generative AI Glossary

- CBIIT Generative AI Hub

- Frederick Lab AI Resource Hub

— Alex Emmons, PhD (BTEP), Amy Stonelake, PhD (BTEP), and Joe Wu, PhD (BTEP)